In our increasingly digital world, CAPTCHAs (“Completely Automated Public Turing test to tell Computers and Humans Apart”) are a common feature designed to distinguish human users from bots. While these tests can be a mild inconvenience for users, they play a crucial role in maintaining the security and integrity of online services. But why do robots, which can perform complex calculations and tasks, struggle with these seemingly simple tests? Let’s look at the reasons.

The Essence of CAPTCHAs

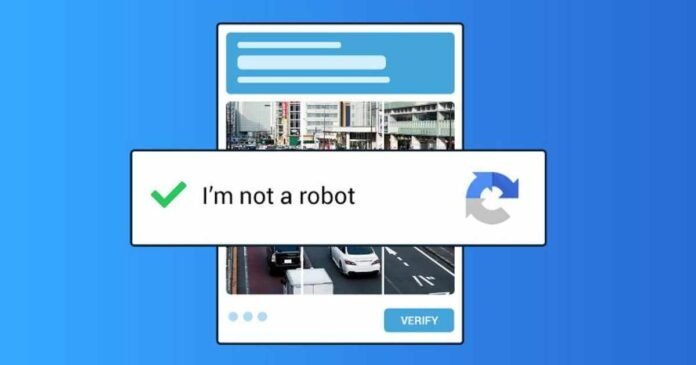

CAPTCHAs are built around tasks that are easy for humans but difficult for machines. They typically involve identifying distorted text, selecting images that fit a certain category, or solving simple puzzles. The fundamental principle is to exploit the gap between human cognitive abilities, such as visual perception and contextual understanding, and the rigid, pattern-bound way machines process input.

The Challenge of Visual Perception

One of the primary types of CAPTCHA involves distorted text. Humans, with their advanced pattern recognition abilities, can usually decipher these distorted characters. Robots struggle with this task because it requires a level of visual perception and contextual inference that automated recognizers do not reliably have. While AI has made significant strides in image recognition, the distortion and noise added to CAPTCHA images are specifically designed to exploit weaknesses in these algorithms.
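To make the idea concrete, here is a minimal sketch of how distortion can throw off a naive pixel-by-pixel matcher. Everything in it is an assumption made for illustration: the 5x7 bitmap `GLYPH_T`, the sine-wave row shift in `distort`, and the `match_score` comparison are hypothetical stand-ins, not how any real CAPTCHA or recognizer works.

```python
import math
import random

# Hypothetical 5x7 bitmap for the letter "T" (1 = ink, 0 = background).
GLYPH_T = [
    "11111",
    "00100",
    "00100",
    "00100",
    "00100",
    "00100",
    "00100",
]

def to_grid(rows, pad=2):
    """Convert '0'/'1' strings to rows of ints, padded with background on both sides."""
    return [[0] * pad + [int(c) for c in row] + [0] * pad for row in rows]

def distort(grid, amplitude=1.5, noise=0.1, seed=0):
    """Shift each row sideways along a sine wave, then flip random pixels."""
    rng = random.Random(seed)
    width = len(grid[0])
    out = []
    for y, row in enumerate(grid):
        shift = round(amplitude * math.sin(y))
        # Pixels shifted in from outside the grid are treated as background.
        shifted = [row[x - shift] if 0 <= x - shift < width else 0
                   for x in range(width)]
        out.append([1 - p if rng.random() < noise else p for p in shifted])
    return out

def match_score(a, b):
    """Fraction of pixels on which two equally sized grids agree."""
    total = sum(len(row) for row in a)
    same = sum(pa == pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
    return same / total

clean = to_grid(GLYPH_T)
print(match_score(clean, clean))            # identical grids agree everywhere
print(match_score(clean, distort(clean)))   # the wavy, noisy copy agrees less
```

A person still reads the wavy copy as a "T", but a matcher that expects every pixel in its original place scores it lower. Real recognizers are far more tolerant than this toy, which is why real distortions are correspondingly more aggressive.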

Contextual Understanding

Another common type of CAPTCHA involves selecting images that match a certain description, like all images containing traffic lights. This task requires not only recognizing objects but also understanding context, something that humans do intuitively but AI finds challenging. For instance, a human understands that a partially visible traffic light still counts as a traffic light, while an AI might fail if the object isn’t fully visible or doesn’t closely match its training data.

The Evolutionary Arms Race

As AI technology improves, CAPTCHAs also change. There’s a continuous battle between CAPTCHA developers and those attempting to create bots capable of passing these tests. For example, when text-based CAPTCHAs became vulnerable to advanced character recognition algorithms, developers shifted towards image-based CAPTCHAs and more complex puzzle-based challenges. This constant evolution helps CAPTCHAs remain a robust line of defense against automated attacks.

Machine Learning and Overfitting

Machine learning models, which are at the heart of modern AI, rely heavily on training data. These models can perform exceptionally well on inputs that resemble their training data but struggle with variations or distortions they haven’t encountered before, a failure to generalize that is closely related to overfitting. CAPTCHAs exploit this limitation by presenting challenges that intentionally deviate from standard patterns, confusing the models.
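The effect can be illustrated with a deliberately simple classifier that only memorizes its training examples. This is a sketch under stated assumptions: the 5x5 bitmaps, the `TEMPLATES` dictionary, and the nearest-template rule in `classify` are all hypothetical, and a modern model would generalize far better than this one.

```python
def parse(rows):
    """Flatten a list of '0'/'1' strings into a tuple of ints."""
    return tuple(int(c) for row in rows for c in row)

# "Training data": one memorized bitmap per letter (hypothetical glyphs).
TEMPLATES = {
    "T": parse(["11111", "00100", "00100", "00100", "00100"]),
    "L": parse(["10000", "10000", "10000", "10000", "11111"]),
}

def hamming(a, b):
    """Number of pixel positions at which two bitmaps differ."""
    return sum(x != y for x, y in zip(a, b))

def classify(pixels):
    """Return the label of the memorized template closest to the input."""
    return min(TEMPLATES, key=lambda label: hamming(TEMPLATES[label], pixels))

def accuracy(samples):
    """Fraction of (label, pixels) pairs that are classified correctly."""
    hits = sum(classify(pixels) == label for label, pixels in samples)
    return hits / len(samples)

# Inputs that look exactly like the training data.
seen = list(TEMPLATES.items())

# The same letters drawn smaller or in a different position.
unseen = [
    ("T", parse(["00000", "00000", "01110", "00100", "00100"])),
    ("L", parse(["00100", "00100", "00100", "00111", "00000"])),
    ("T", parse(["00000", "00000", "00000", "11100", "01000"])),
]

print(accuracy(seen))    # every memorized example is recognized
print(accuracy(unseen))  # variations the model never saw fare much worse
```

The shifted "L" overlaps the stem of the memorized "T" more than it overlaps the memorized "L", so the classifier gets it wrong even though a person would not hesitate.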

Ethical and Practical Considerations

There are ethical considerations as well. Developers of CAPTCHA systems must balance security with accessibility. Tests need to be challenging enough to deter bots but not so difficult that they frustrate human users or become inaccessible to those with disabilities. This delicate balance ensures that CAPTCHAs remain an effective tool without unduly hindering legitimate users.

Looking Ahead

Future advancements in AI could make passing CAPTCHAs easier for robots. However, as AI evolves, so too will CAPTCHA technology. Emerging methods, such as behavioral biometrics (analyzing the way users interact with devices) and continuous authentication (ongoing verification of user identity), promise to enhance security while reducing the need for traditional CAPTCHA challenges.
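As a rough illustration of the behavioral idea, the sketch below flags input whose timing is suspiciously regular. It is a toy heuristic under loud assumptions: the function names, the sample timestamps, and the `min_jitter_ms` threshold are all hypothetical, and a bot that adds random delays would slip straight past it. A real system would presumably combine many more signals.

```python
from statistics import pstdev

def interval_jitter(timestamps_ms):
    """Standard deviation of the gaps between consecutive events, in milliseconds."""
    gaps = [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]
    return pstdev(gaps) if len(gaps) > 1 else 0.0

def looks_scripted(timestamps_ms, min_jitter_ms=5.0):
    """Flag event streams whose timing is almost perfectly regular.

    min_jitter_ms is a hypothetical threshold chosen for this example only.
    """
    return interval_jitter(timestamps_ms) < min_jitter_ms

# Made-up click times in milliseconds.
bot_clicks = [0, 100, 200, 300, 400]      # metronome-like spacing
human_clicks = [0, 130, 210, 395, 450]    # uneven spacing

print(looks_scripted(bot_clicks))
print(looks_scripted(human_clicks))
```

The appeal of this approach is that the check runs in the background, so legitimate users never see a puzzle at all.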

In conclusion, while robots and AI systems are becoming increasingly sophisticated, CAPTCHAs exploit the nuanced and context-driven aspects of human cognition that machines still struggle to replicate. This dynamic interplay ensures that CAPTCHAs remain a vital tool in the ongoing effort to secure our digital environments against automated threats.